Not making anti-ChatGPT one's whole thing

ChatGPT and "AI Art" are weapons against, respectively, meaning and artists, and as tech people we have a duty to communicate this. BUT... we've seen this before.

Quick meta: I fell off the posting wagon, which is probably fine for you but not for me and my momentum, so I’m trying to build back up with some short posts.

I’ve watched the rising interest in and adoption of ChatGPT and similar tools with slow horror and dread, but also with a kind of wearied familiarity. As geeks we now seem to be stuck in a perpetual anti-hype cycle, where Silicon Valley and its global network of Venture Capitalists and Private Equity Firms sell the world on a new “disruptive” thing and all of the sane people have to point out why that thing does not, and never can, do what is promised of it.

Mostly it has been crypto and its hell spawn like NFTs, but we’ve also had the Unicorn era of “companies” designed specifically to break existing systems like regular employment, stable workspaces, public transport, mail and couriers; and “self-driving cars” that don’t and can’t; social media designed to hurt us; and now ChatGPT.

[Twitter disclaimer that these disruptions vary in malignancy and danger - their common nature is their use of an unquestioning pipeline of hype and legitimacy-building]

Sometimes these things are complicated tech such that, while the lay person might suspect yet another scam, they can’t prove it, and regular commentators simply publish PR lies uncritically or even shill for them. So it falls on the loud tech folk to find ways to communicate the scam and reassure people that their instincts are not, in fact, unfounded Fear, Uncertainty, and Doubt.

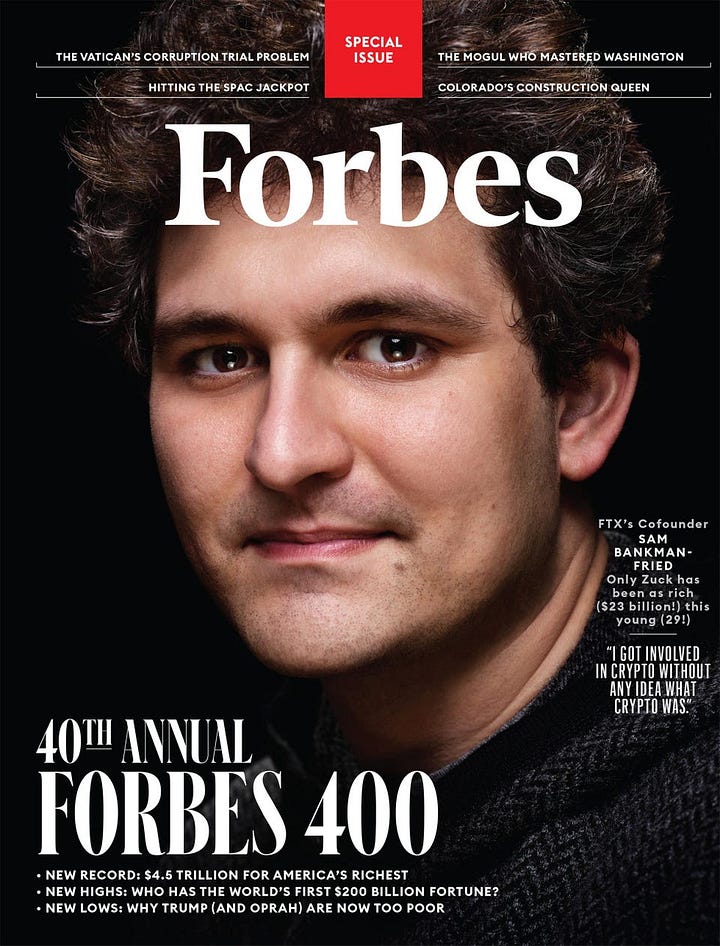

The problem is that these are voices in the dark. The hype engine they are countering is huge and well-funded. And so a magazine can run an uncritical puff piece for an alleged fraudster one month, but hey, next month they and their writers are onto the next thing.

And so regular nerdy folk like Molly White, Cory Doctorow, Folding Ideas and, to a more specialised extent, Cas Piancey, David Gerard, etc., get pulled into having to refute and debunk yet another cycle of bullshit, lies, and often, fraud.

All the above have found ways to make it work for them - they probably all consider themselves more writers than anything else, and material is material. But it feels like so much energy is being spent, not just by the “thought-leaders”, but by all of us who are duty-bound to protect our friends and family from getting hurt by these forces. It’s a rear-guard action.

And if crypto was a warm up, ChatGPT is the main event. Even middle-of-the-line, non-political tech people are starting to get a sense of how fucked things are going to get. ChatGPT and its cousins are, without exaggeration, weapons that break basically everything, possibly irreversibly.

As one small step to safeguarding knowledge, I grabbed a copy of Wikipedia a month ago. In many ways Wikipedia’s information is biased and imperfect (for the traditional reasons: white supremacy, colonialism, misogyny, liberalism), but it is still, for now, human knowledge and not plausible nonsense. I have a feeling that we will long for this relative reliability of knowledge in times to come.

How do we avoid becoming an ancient mariner and stopping one of three to convey the danger facing us? The fact of the matter is that I don’t know. We need a collective digital picket line, to promise to each other that we will not become scabs and use these tools, not even for “good” reasons. But we tried with crypto, and it didn’t save the many thousands that were scammed. So… who knows?

I fear this short post has grown long. To quote, apparently, Pascal in 1657:

“I have made this longer than usual because I have not time to make it shorter.”

Talk soon.

If you are confused by my loathing of ChatGPT, the best current summary was written by Dan McQuillan and I recommend you read it.

If you are worried about the Pascal quote ("apparently"), I can put your mind at ease: it is genuine, and a pretty good translation of a passage from letter 16 of the "Lettres provinciales":

"[...] mes Lettres n'avaient pas accoutumé de se suivre de si près, ni d'être si étendues. Le peu de temps que j'ai eu a été cause de l'un et de l'autre. Je n'ai fait celle-ci plus longue que parce que je n'ai pas eu le loisir de la faire plus courte."

Though to be terribly pedantic, that letter is from 1656; the Provinciales were sent in 1656-57. To prove I'm not a chatbot, full reference: Blaise Pascal, seizième lettre, 4 décembre 1656, in Œuvres complètes, texte établi et annoté par Jacques Chevalier, Paris, Gallimard, coll. «Bibliothèque de la Pléiade», 34, 1962, p. 865

I understand the concern about human content being decimated by machine content, but comparing this endless wave of AI to the failure of Crypto is idiotic.

The two things don't intersect; at best, generative art models have emerged to kick NFTs out of the boat for good. Real humans have always been free to write "plausible nonsense" anywhere. Bearing this in mind, what is your real concern? I would say your concern is the quantity and ease of creating this type of content right now, but AI is just a tool. Who gets to choose how to use the tool? We do, the human nerds behind the screen.

We deal with spam and scams in our emails on a daily basis, all spread through programmed actions, but nobody has ever tried to end the programming languages that make this possible, because it doesn't make sense: they are not the real villain of the story.

Targeting AI models is not targeting the real villain.